You’ll be able to see there is a new tab under SQL Agent, called Notebook Jobs. You should also have a new SQL Agent Job. Once you get through the step above, you should have two new tables in whichever database you choose as your Storage Database. The rest of the options are pretty standard too, so I won’t go through those. This is pretty similar to what you would do when scheduling any T-SQL script.

In this case, I’m choosing to start BPCheck.ipynb ( Best Practices Check from the SQL Tiger Team) in the master database. The next option, Execution Database, is where the SQL Notebook will start running from. You’re also choosing where it will store the results from that SQL Notebook every time you run it.

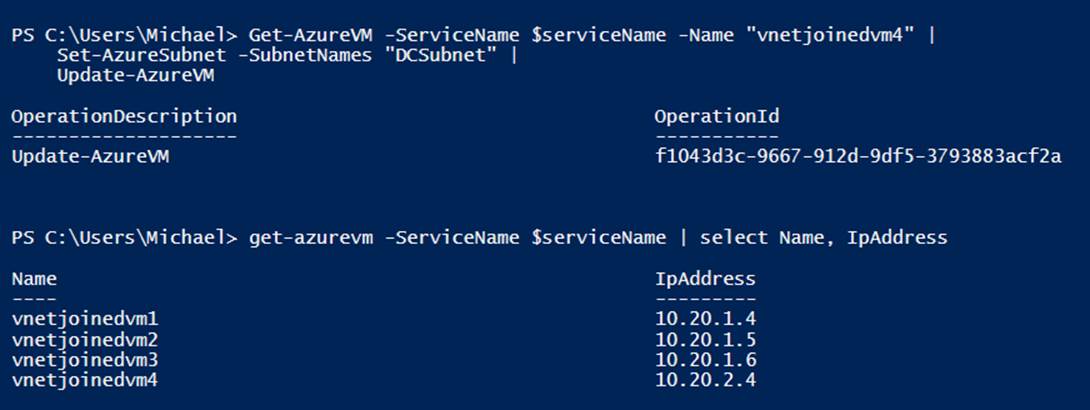

When you choose your Storage Database you’re choosing where SQL Server will store the SQL Notebook that you’re currently scheduling. First, you’ll see an option for the Storage Database, this is a key part which is new. You’re presented with a few options and this varies a little from your typical SQL Agent Job, so let’s dig in a little. After that, you are present with a side panel asking you some specifics about how you want to configure your SQL Notebook to run. Let’s start by taking a look at when you click that “Schedule Notebook” button in Azure Data Studio which brings up a dialog asking you which instance of SQL Server you want to schedule this SQL Notebook on. Scheduling SQL Notebooks in Azure Data Studio This past Friday (September 20 th, 2019) a new version of the SqlServer PowerShell module was posted to the Gallery, with a new Invoke-SqlNotebook cmdlet. Earlier this month, the Insiders build of Azure Data Studio received the ability to add SQL Notebooks in SQL Agent. There are two new options for automating your SQL Notebooks with your SQL Servers.
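The other option mentioned above is the Invoke-SqlNotebook cmdlet in the SqlServer PowerShell module. If you want to kick off the same BPCheck.ipynb run in master from a script instead of a SQL Agent Job, the following is a minimal Python sketch. It assumes `pwsh` (PowerShell 7) is on the PATH and that the SqlServer module is installed. The helper names `ps_quote` and `build_invoke_sqlnotebook_cmd` and the output file name are hypothetical and used only for illustration. The `-ServerInstance`, `-Database`, `-InputFile` and `-OutputFile` parameters are the cmdlet's documented ones.

```python
import subprocess
from typing import List, Optional


def ps_quote(value: str) -> str:
    """Wrap a value in PowerShell single quotes, doubling any embedded single quote."""
    return "'" + value.replace("'", "''") + "'"


def build_invoke_sqlnotebook_cmd(
    server_instance: str,
    database: str,
    input_file: str,
    output_file: Optional[str] = None,
) -> List[str]:
    """Hypothetical helper: build a pwsh argument list that runs Invoke-SqlNotebook once."""
    script = (
        f"Invoke-SqlNotebook -ServerInstance {ps_quote(server_instance)}"
        f" -Database {ps_quote(database)}"
        f" -InputFile {ps_quote(input_file)}"
    )
    if output_file is not None:
        # Save the executed notebook, including its results, to this file.
        script += f" -OutputFile {ps_quote(output_file)}"
    return ["pwsh", "-NoProfile", "-NonInteractive", "-Command", script]


def run_notebook(cmd: List[str]) -> int:
    """Run the command and return pwsh's exit code (needs pwsh and the SqlServer module)."""
    completed = subprocess.run(cmd, check=False)
    return completed.returncode


if __name__ == "__main__":
    # Only prints the command; call run_notebook(cmd) to actually execute it.
    cmd = build_invoke_sqlnotebook_cmd(
        "localhost", "master", "BPCheck.ipynb", "BPCheck_results.ipynb"
    )
    print(cmd)
```

Passing an argument list rather than a single shell string keeps Python from re-parsing the command. Single-quoting each value means PowerShell treats paths and instance names literally, with no variable expansion.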
